Every six months or so, I found myself in the same spot. A new release was out, the features were solid, the engineering work was impressive… and yet the same questions kept coming back from sales, customers, and even internal teams.

“What actually changed?”

“What should I care about?”

“How do I explain this without reading release notes out loud?”

That’s the gap the “What’s New” decks were meant to close. Not as a one-off presentation, but as a repeatable way to tell the product story so it made sense to real humans, especially the ones who didn’t live and breathe the roadmap every day.

The Challenge

The problem wasn’t that the releases were bad. Quite the opposite. The problem was volume.

Enterprise software ships on a predictable cadence. New features, enhancements, deprecations, tech previews — all of it lands on a schedule whether anyone is ready or not. By the time a release goes GA, teams are already juggling conferences, roadmap conversations, internal launches, and whatever fire happens to be burning that week.

What I kept seeing was this:

- Release notes were thorough, but overwhelming

- Sales teams wanted a clear story, not a changelog

- Customers cared about impact, not every individual bullet point

And on top of that, the audience was never just one type of person. A single update had to make sense to:

- A practitioner watching live

- A sales engineer skimming slides before a customer call

- A customer catching the replay two weeks later

Without a consistent way to frame what mattered most, every release risked becoming noise — even when the work underneath it was genuinely exciting.

The Approach

After a few of these updates, it became obvious that the problem wasn’t the content — it was the format.

Every product team I worked with had the same raw ingredients: feature lists, engineering notes, roadmap context, and a rough idea of who the audience was. What kept breaking down was the handoff between knowing what shipped and explaining why anyone should care.

So instead of treating each update as a brand-new presentation, I started thinking in terms of a system.

The goal was simple:

- Start with why this matters, not just what shipped

- Curate a small number of changes that actually moved the needle

- Design once, then reuse across multiple audiences and channels

That meant forcing some discipline. Not every feature made the cut. Not every slide was technical. And every deck had to work in more than one context — live presentations, recorded webinars, internal enablement, and on-demand viewing.

I leaned on Pragmatic Marketing principles here, but quietly. Personas shaped what made it into the deck. Market problems dictated framing. The framework stayed in the background while the story stayed front and center.

Over time, this approach turned into a repeatable model I could apply regardless of product, release size, or company. The examples changed. The structure didn’t.

Key Deliverables

Once the structure was in place, the actual outputs became much easier — and more consistent — to produce. Instead of reinventing the wheel every release, each update followed the same basic anatomy, even as the content changed.

At a high level, the system produced three things:

A Modular “What’s New” Deck

Each deck followed a predictable flow:

- Context first: what kind of release this is and who it’s for

- Top themes: a small number of changes that actually matter to buyers

- Selective depth: enough detail to be credible without overwhelming

- What’s next: timelines, previews, or things to keep an eye on

This made the deck usable whether someone watched live, skimmed slides before a call, or jumped to a specific section on replay.

Live and Recorded Presentations

The same deck was designed to work on-camera. That meant:

- Slides that supported narration instead of replacing it

- Clear transitions for live discussion and Q&A

- Natural breakpoints for chapter markers and replays

In practice, this allowed a single narrative to power live webinars, recorded updates, and shorter clips without rewriting the story each time.

Reusable Enablement Assets

Because the structure stayed consistent, pieces of the deck could be lifted and reused:

- Sales teams pulled slides directly into customer conversations

- Partners used trimmed versions for their own briefings

- Marketers repurposed sections into blog posts, videos, or follow-up content

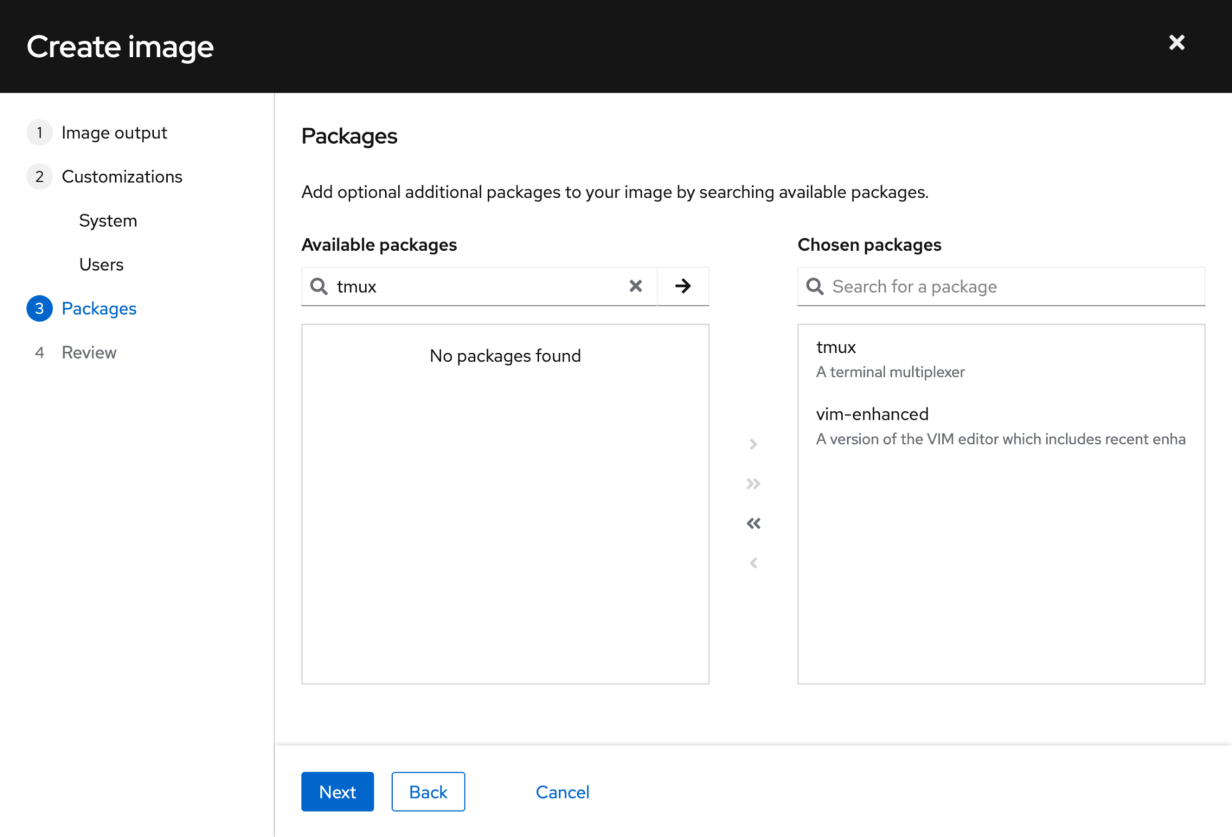

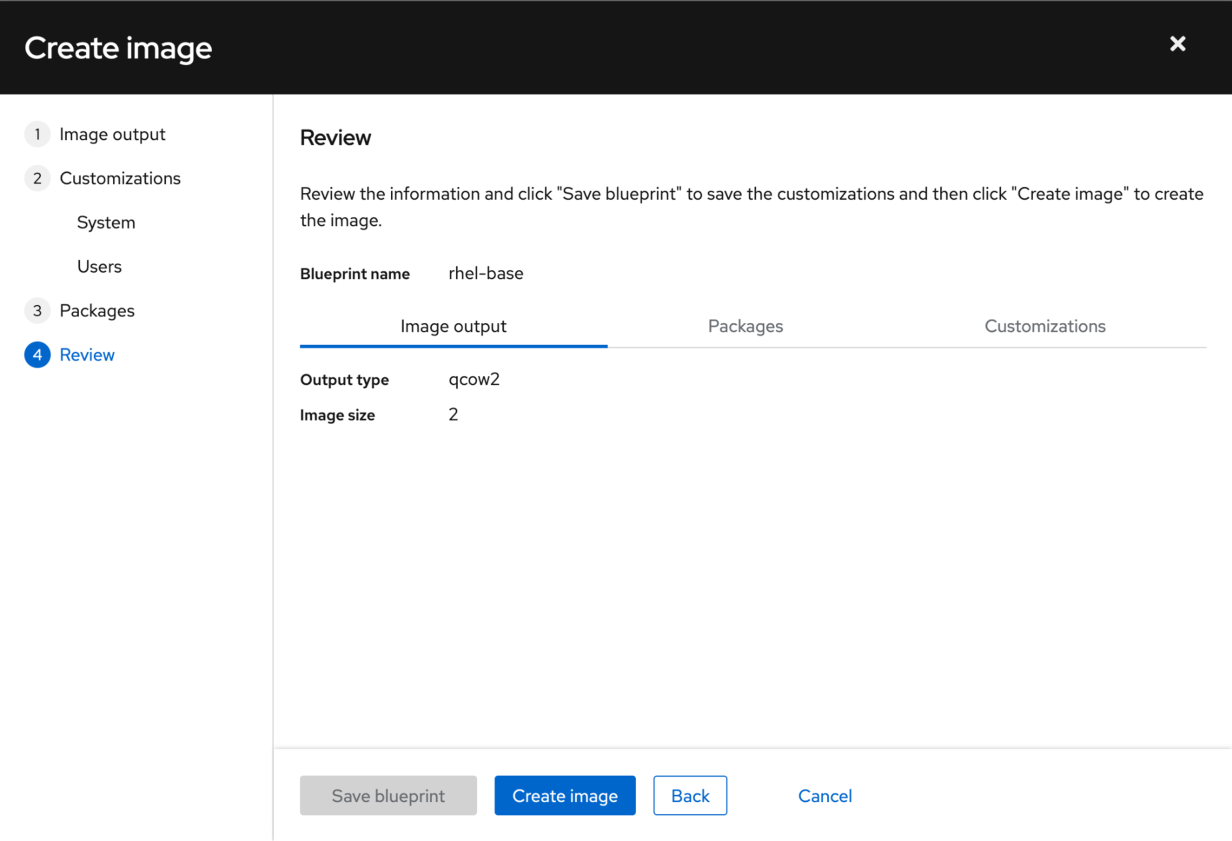

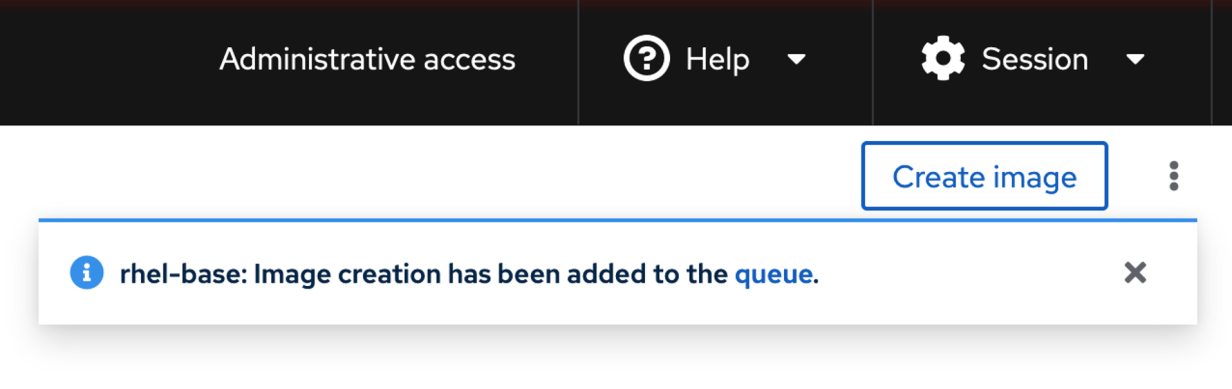

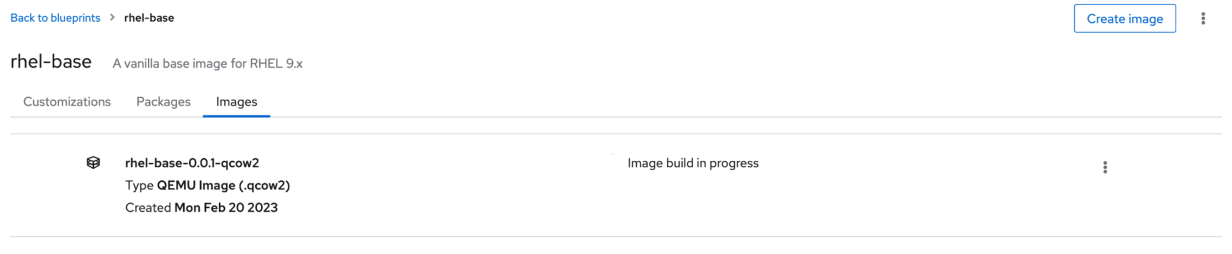

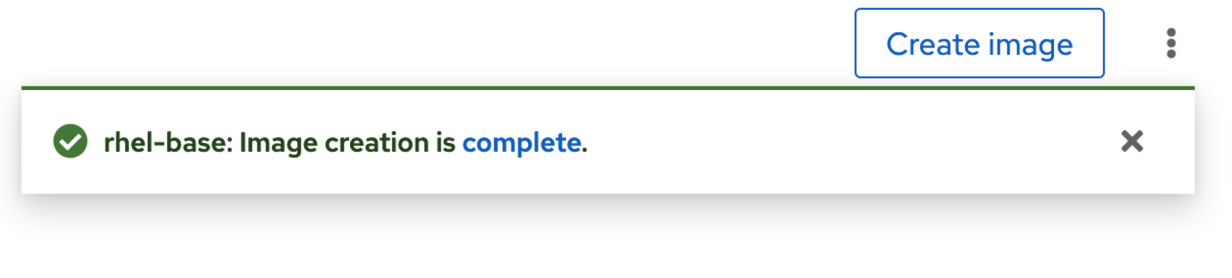

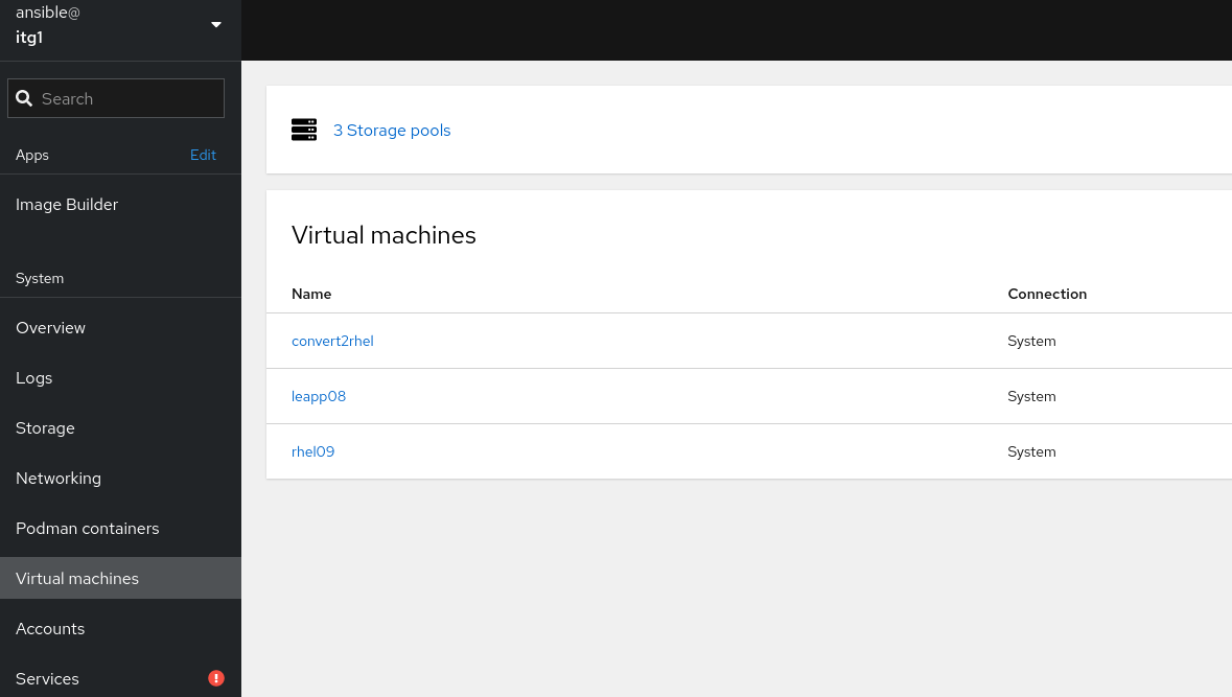

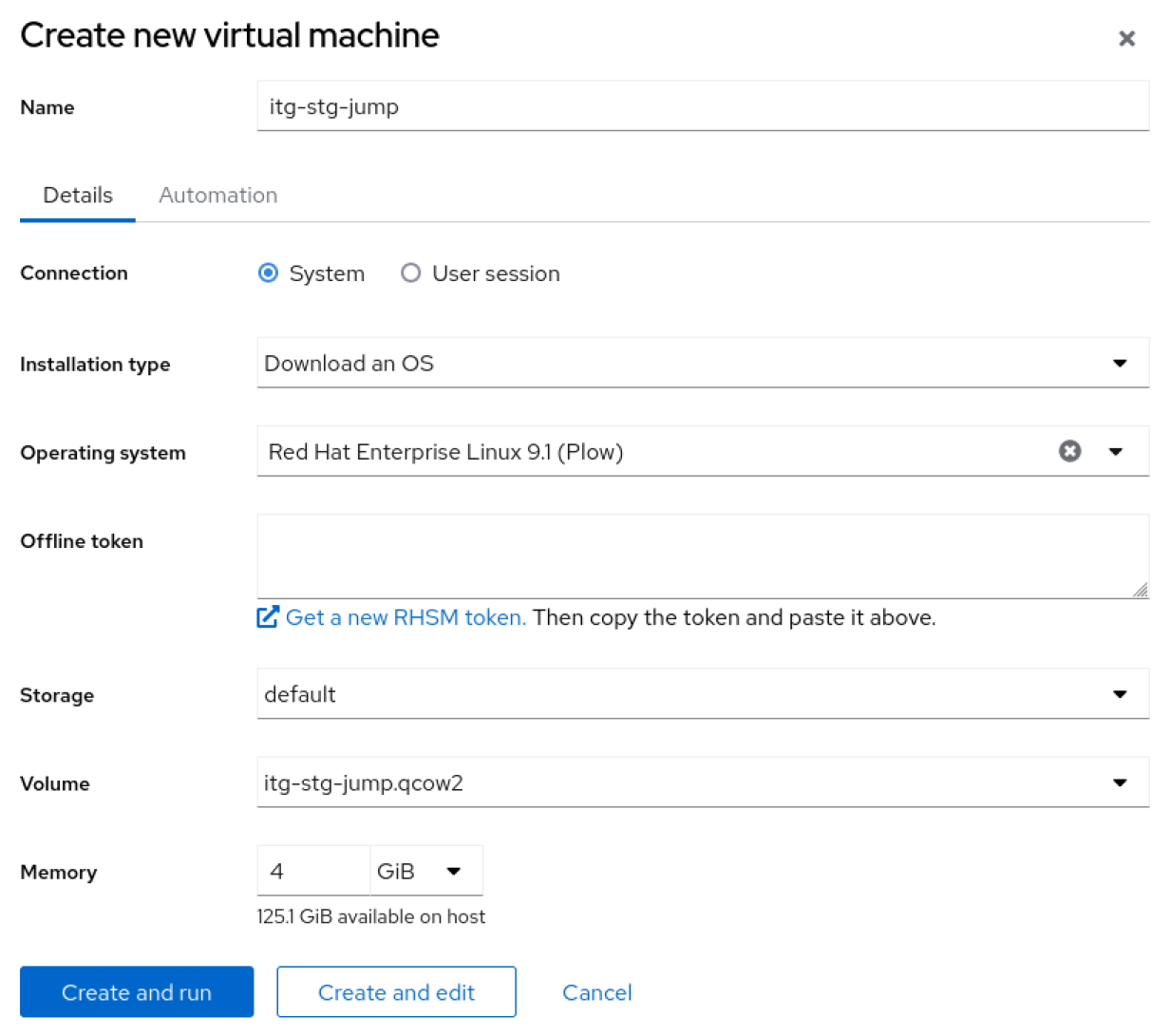

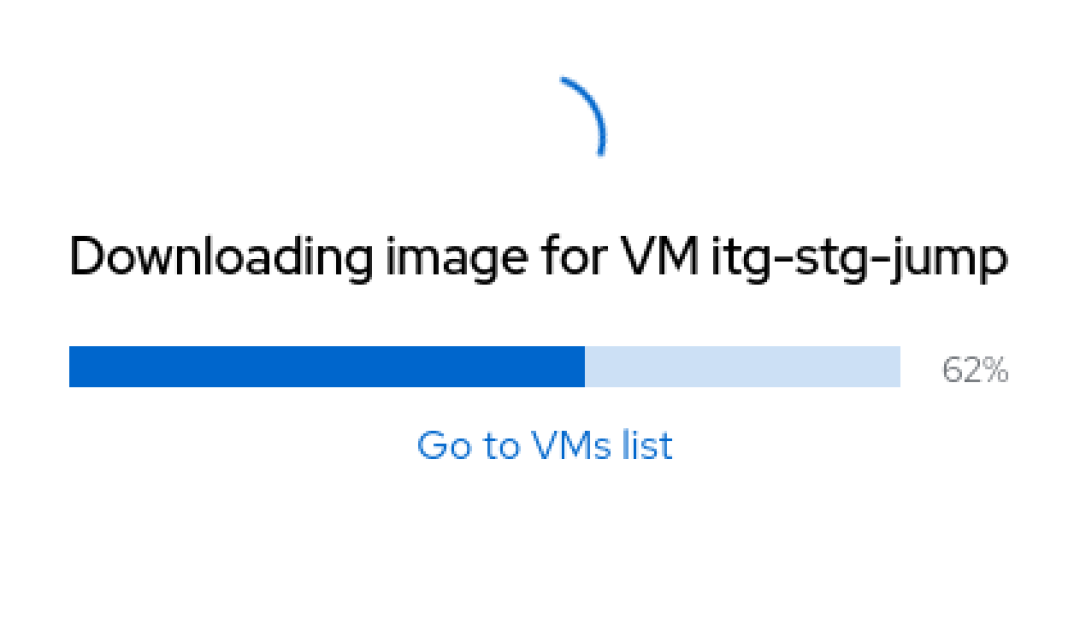

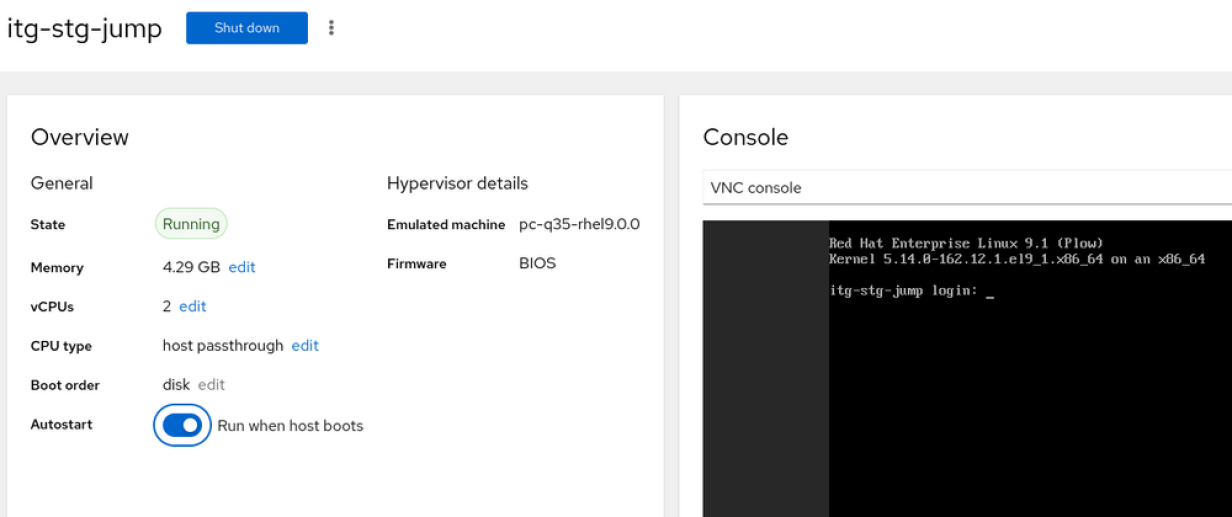

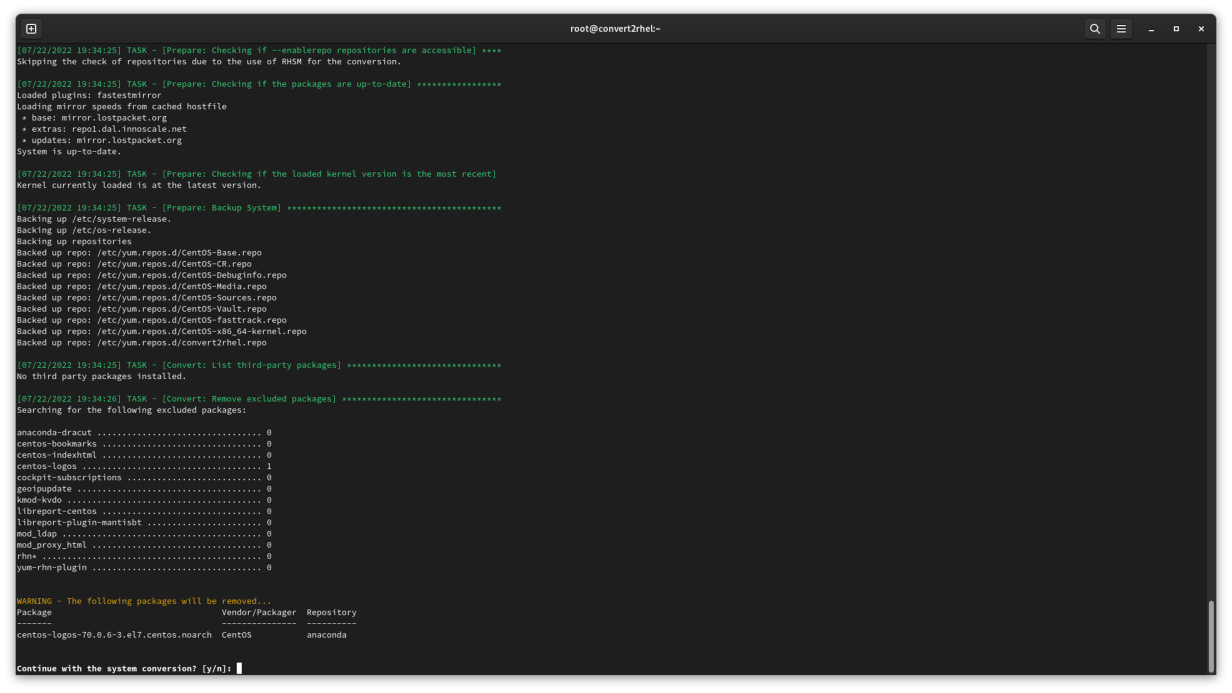

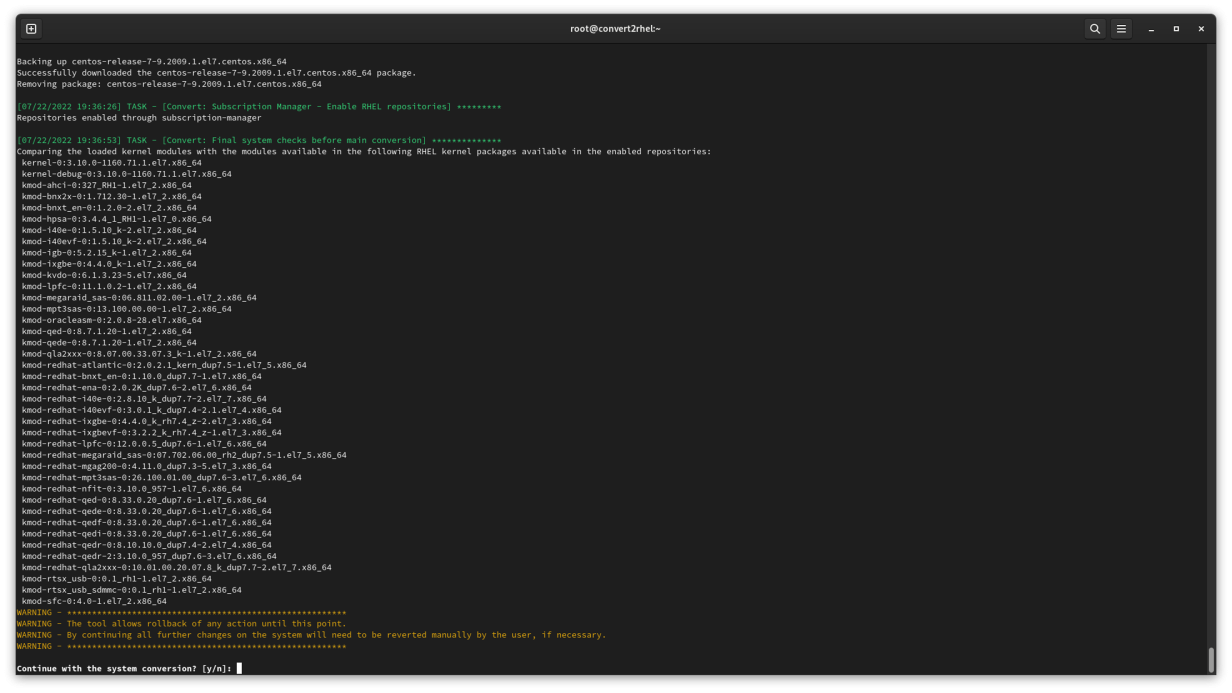

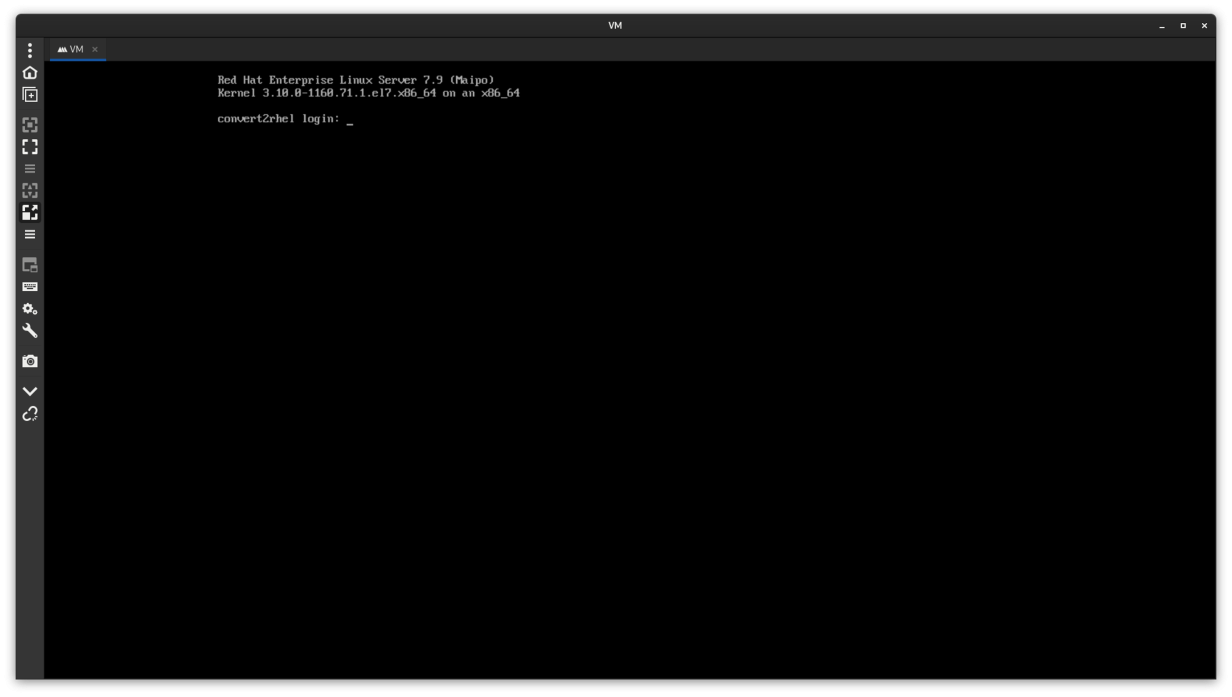

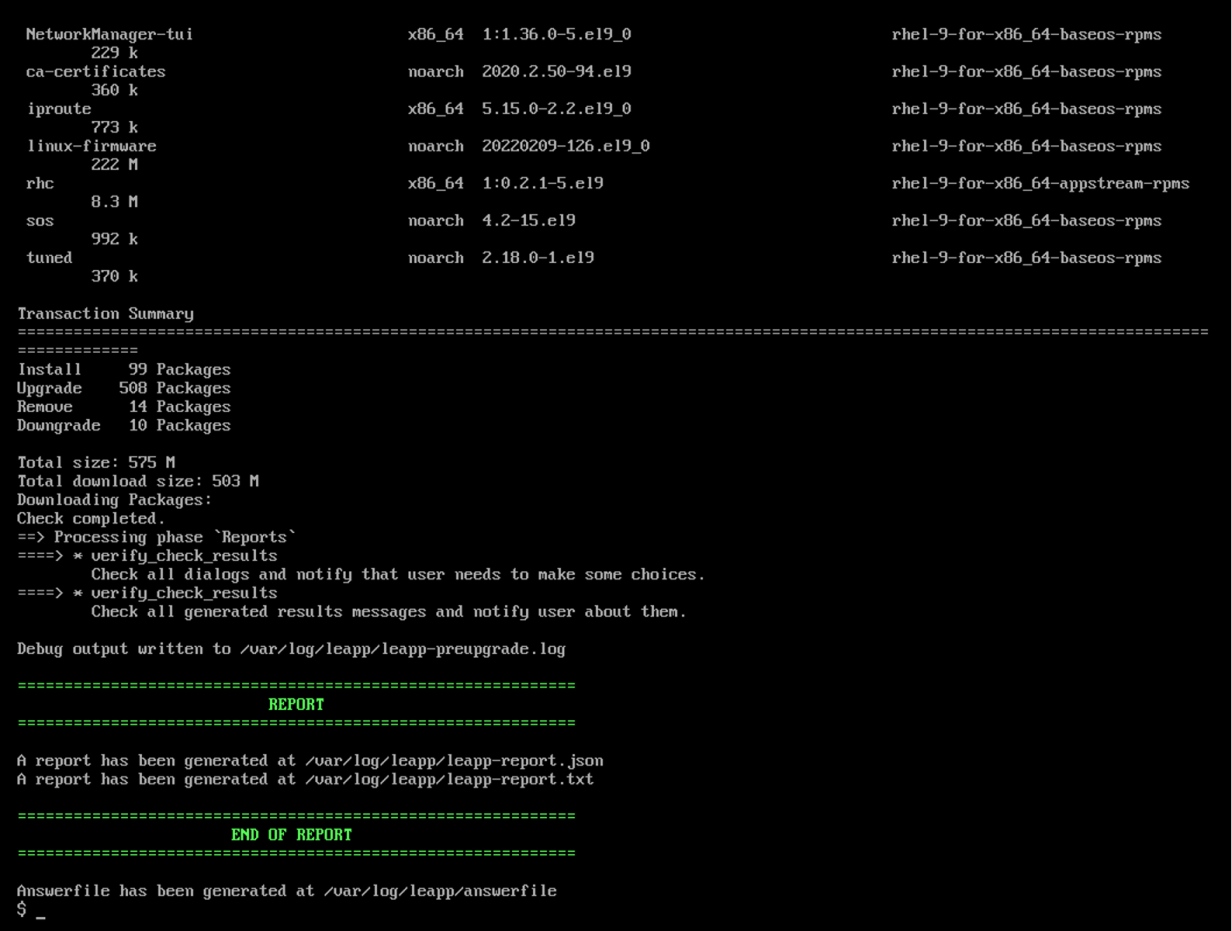

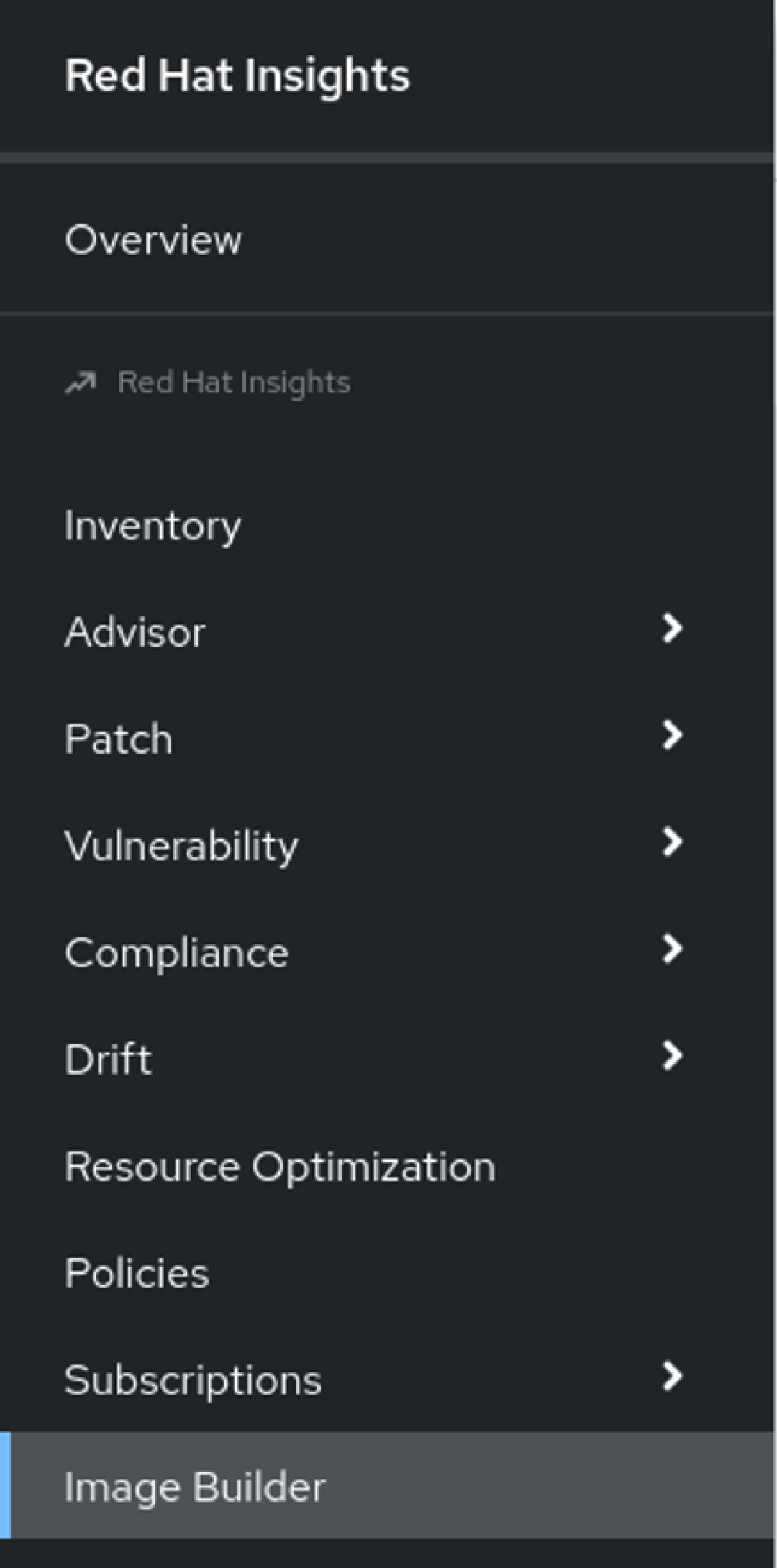

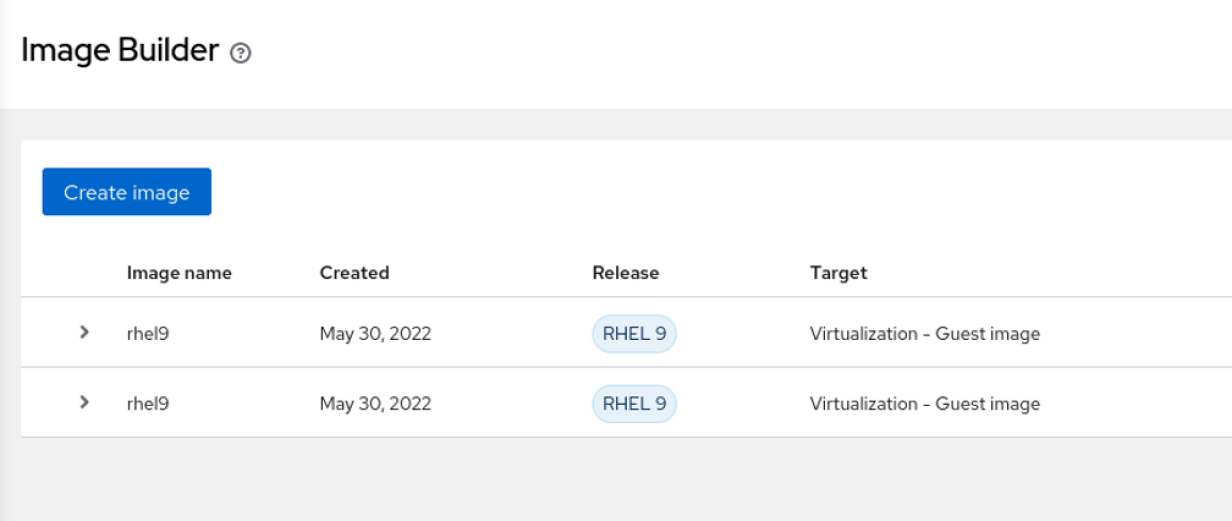

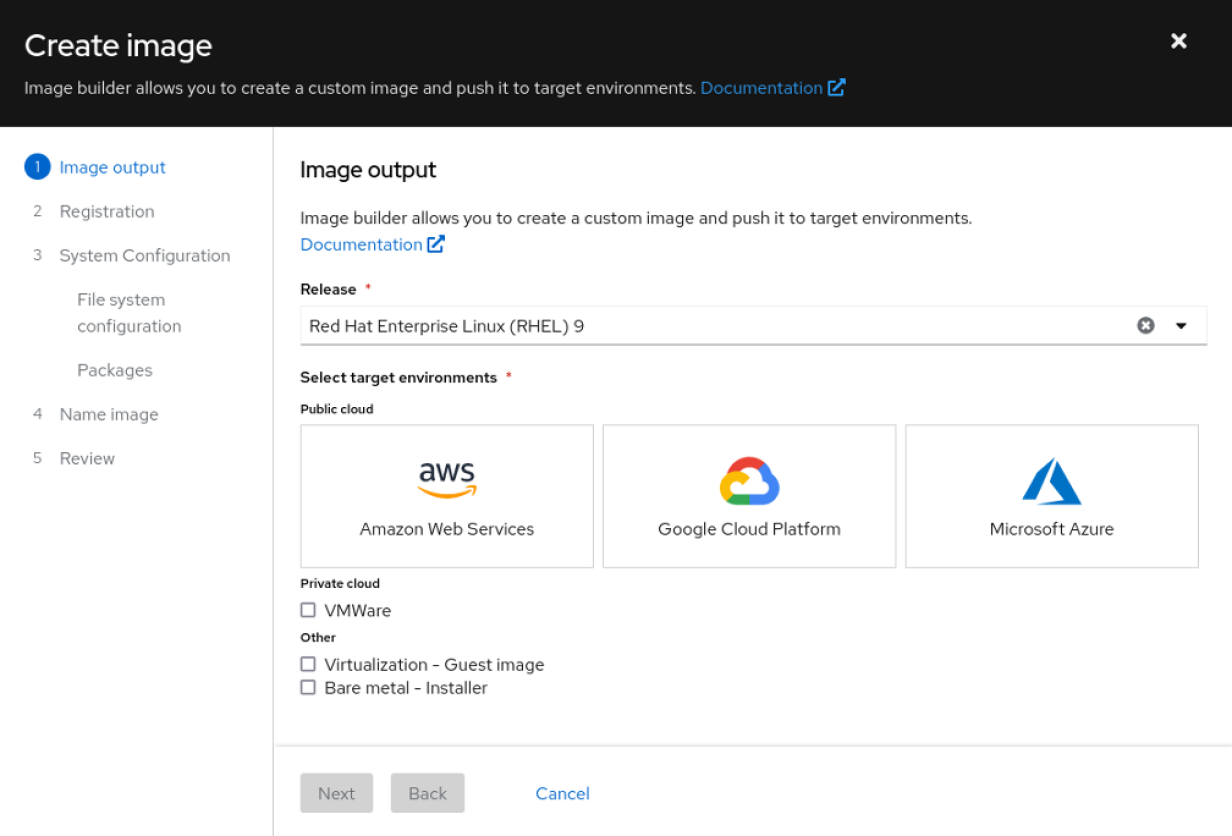

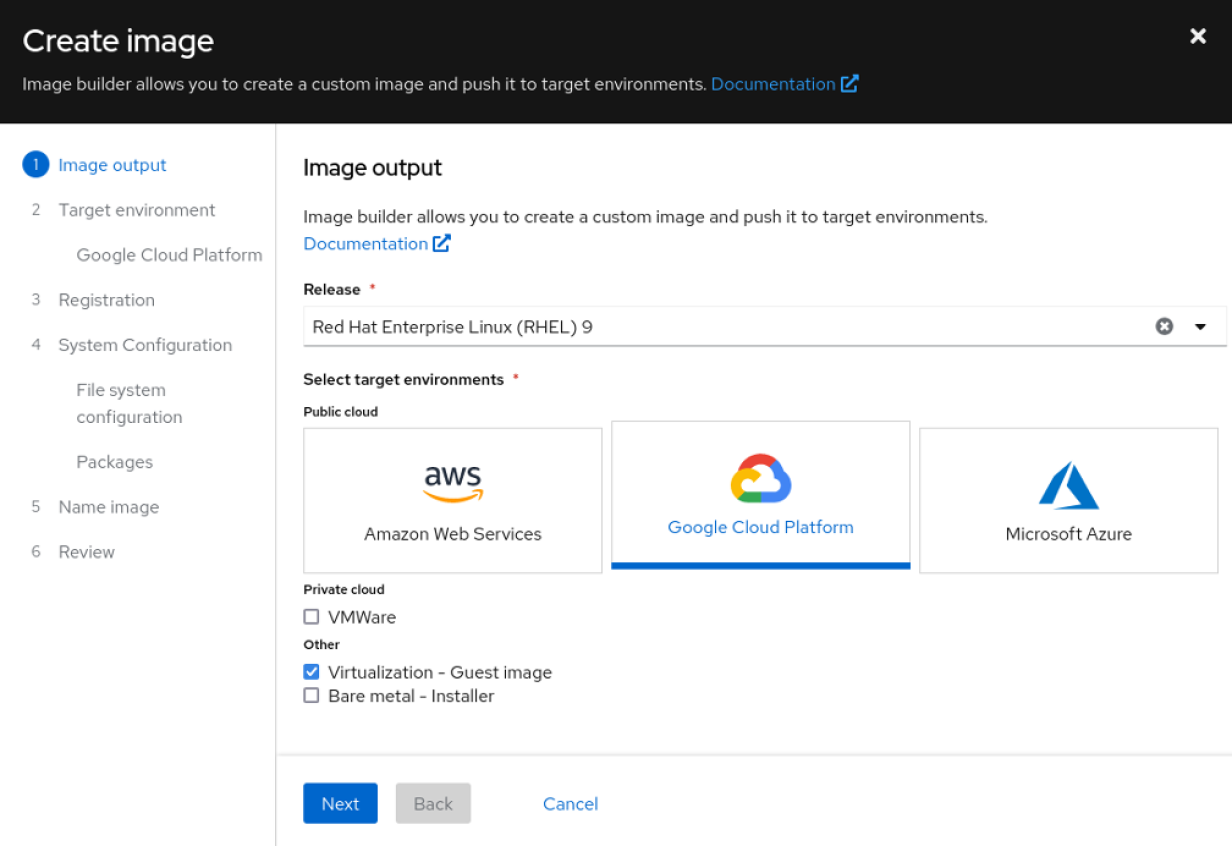

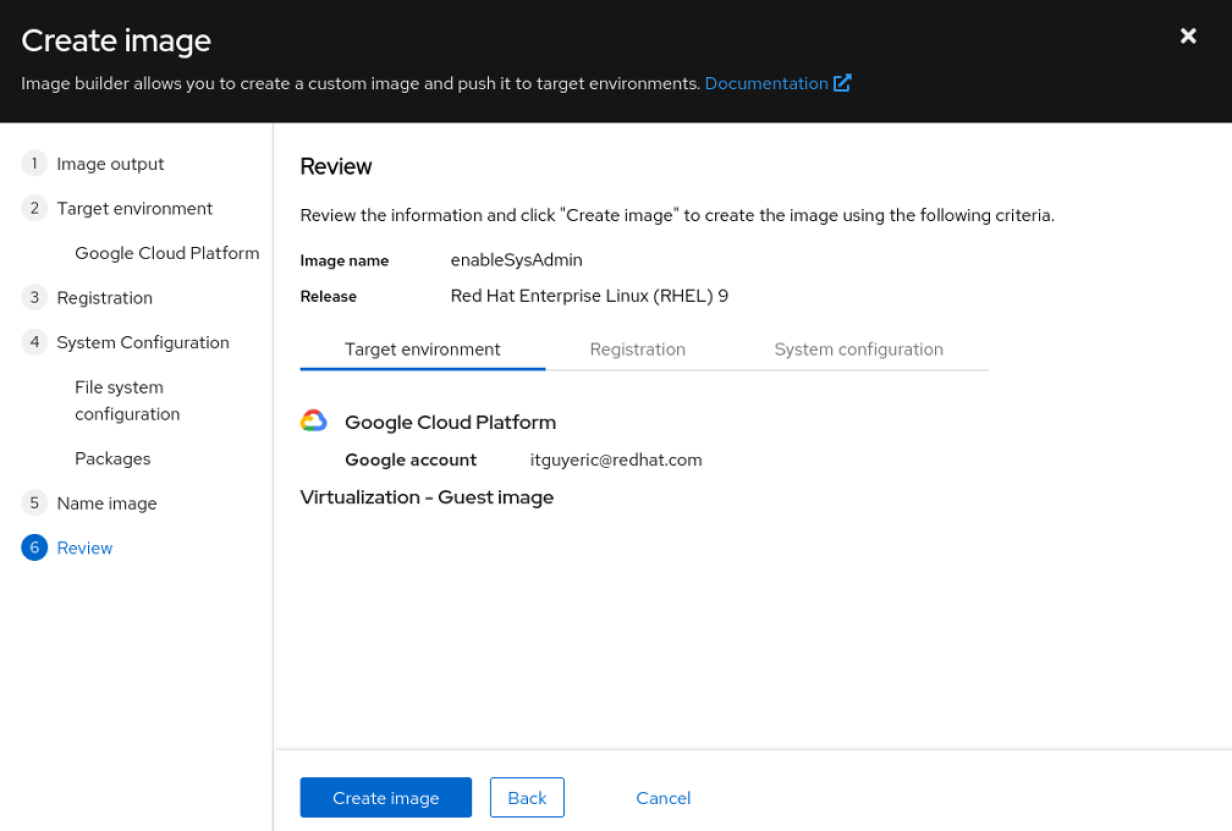

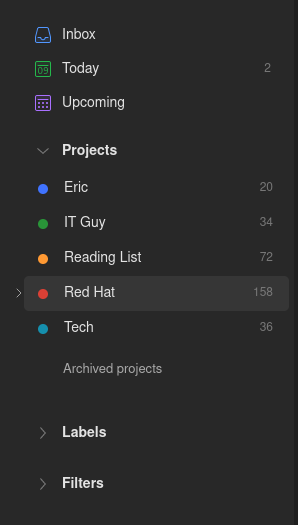

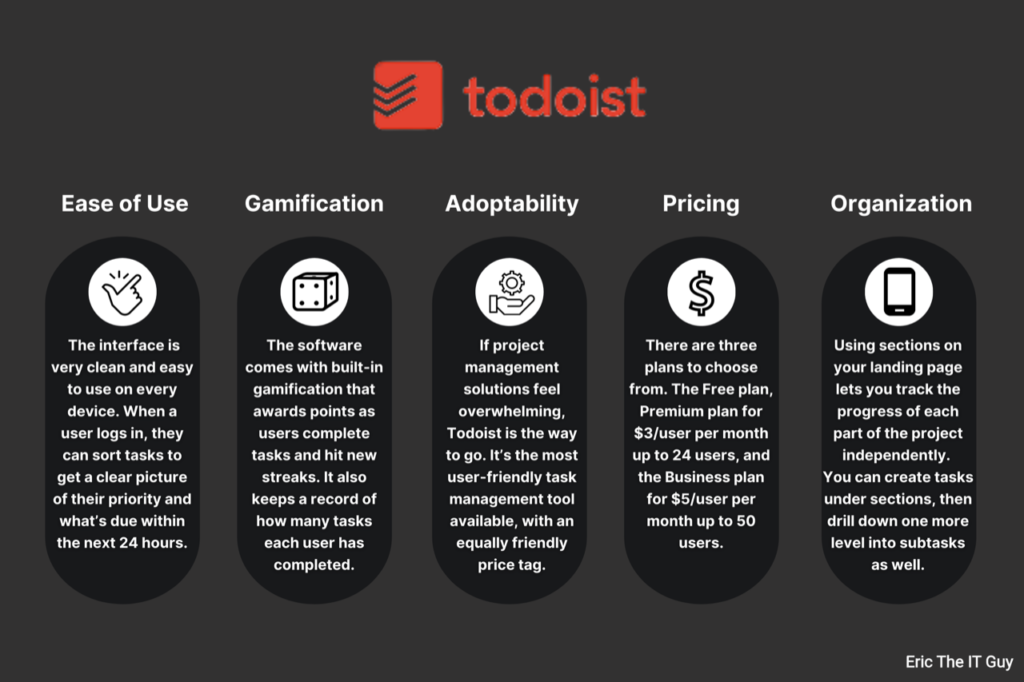

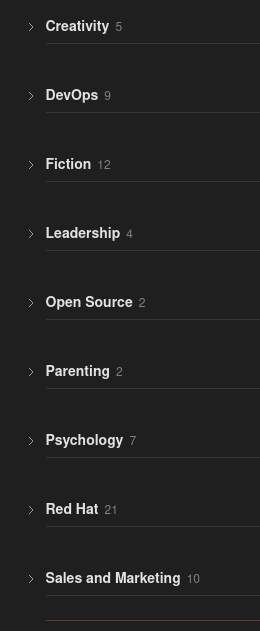

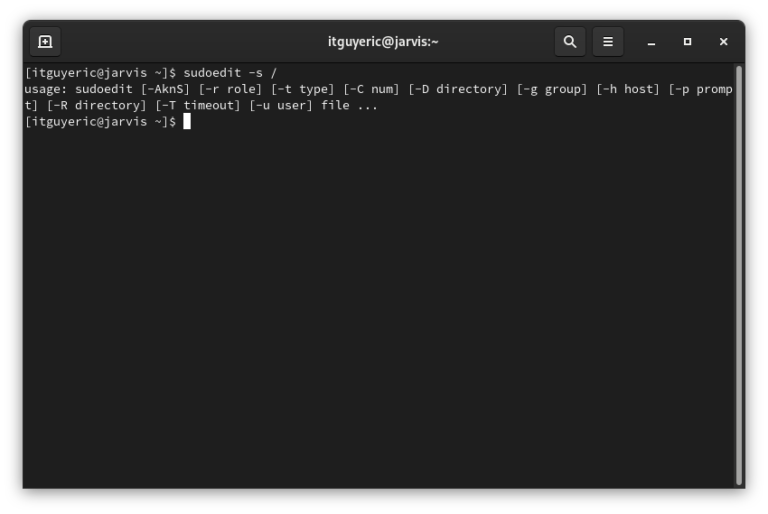

One concrete example of this approach in action was a recurring “What’s New” update for Red Hat Enterprise Linux, where the same core deck supported a live YouTube presentation, internal enablement, sales presentations, and on-demand viewing — all without maintaining separate versions.

The deliverables mattered, but the real value was consistency. Once teams knew what to expect, the updates became easier to consume, easier to share, and easier to trust.

Impact

The most noticeable change wasn’t a single metric — it was how people interacted with the updates.

Before this approach, release content tended to feel disposable. Watch it live, forget it, move on. Once the structure stabilized, the updates started behaving more like a reference point than an event.

Here’s what shifted over time:

- Clearer conversations: Sales and field teams stopped asking what they should focus on and started asking deeper follow‑up questions.

- Better reuse: The same deck showed up in customer calls, internal trainings, and partner briefings without needing to be rewritten.

- Higher engagement: Live sessions held attention longer, and recorded views stayed relevant well past the initial release window.

- More confidence: Stakeholders trusted the updates because they knew what they were getting — context, prioritization, and honesty about what mattered.

In at least one recurring release series, this approach contributed to sustained growth in live attendance and replay views, while reducing the amount of one‑off enablement work needed each cycle.

More importantly, it changed expectations. Product updates stopped feeling like a fire drill and started feeling like a known rhythm — something teams could plan around instead of react to.

Conclusion

What started as a way to survive release cycles eventually became one of the most reliable and successful parts of my product marketing toolkit.

The lesson wasn’t about slides. It was about respect — for the audience’s time, for the complexity of the product, and for the reality that most people don’t live inside a roadmap. When updates are framed with context, intent, and restraint, people don’t just consume them. They trust them.

This same model has worked across different products, different release cadences, and different audiences because the fundamentals don’t change:

- Start with why

- Curate instead of catalog

- Design for reuse

- Treat enablement as a system, not a one-off

If you’re building product updates, enablement content, or launch narratives and finding that they’re not landing the way you expect, one of these four fundamentals is usually where things break down.

If you’d like to talk about how this approach could apply to your product, your team, or your release motion, I’m always happy to compare notes.

—

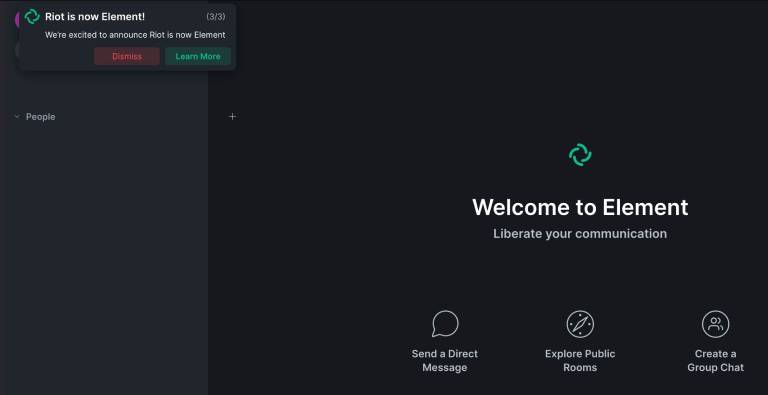

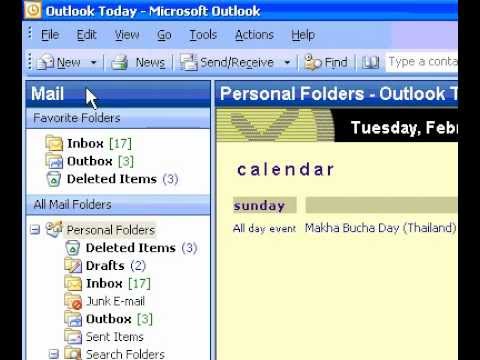

Photo by appshunter.io on Unsplash